CGarchitect's Graphics Card Round Up

CGarchitect Graphics Card Review Roundup

by CGarchitect and Renderstream

Anyone who has worked in this industry for any length of time has at some point had to specify a video card for a rendering workstation. The seemingly endless lineup of models and the often cryptic specifications make selecting the best card a daunting task. Most people rely on product reviews from the gaming industry, on whatever their budget will allow, or both. Neither, however, provides the insight required to properly choose a card in the visualization field. The purpose of this review is to evaluate some of the latest cards in the industry in the context of viewport performance for architectural visualization professionals.

In years past there were a number of vendors all vying for the CG market, but today the vast majority of the industry uses one of two manufacturers: NVIDIA or ATI. In our recent industry survey of the architectural CG market, we determined that 86% of our site visitors use NVIDIA cards and 22% use ATI cards. Respondents could choose multiple manufacturers, which is why the total exceeds 100%. The survey does, however, show the significant skew towards NVIDIA products. Despite this difference in market share, this review examines six ATI cards and six NVIDIA cards. While the vast majority of cards we tested came from the professional series lines of both vendors, we also wanted to evaluate the long-held and largely anecdotal belief that the Radeon and GeForce cards perform as well as the professional series cards for a fraction of the cost. Finally, with the help of RenderStream, we are also going to explain some of the terminology used on both the NVIDIA and ATI websites to describe video card specifications. Coupled with our in-house performance benchmarks, we hope this review will be the first of its kind to provide real-world insight into graphics cards in the professional visualization industry.

The Tests

While GPU compute tests will become more relevant in the months and years ahead, there are still not enough publicly released applications to effectively compare cards at this point. As such, this review focuses exclusively on viewport performance tests in a number of widely used applications within our industry. The applications we tested were AutoCAD 2010, AutoCAD 2011, Google SketchUp 7.1, 3ds Max 2010, 3ds Max 2011 and Cinema4D's Cinebench. In AutoCAD and 3ds Max, both the NVIDIA performance drivers and the DX9 drivers were tested. In our results graphs below, we report only the results from the NVIDIA performance drivers in AutoCAD, but report both DX9 and performance drivers in 3ds Max. All ATI cards for 3ds Max and AutoCAD were tested using DX9 drivers, as ATI does not currently offer a performance driver for its FirePro cards.

While we recognize that this does not cover every application used in our field, it does represent those most widely used. Some of you may also be familiar with the SPECviewperf benchmarking application, so we wanted to comment on its exclusion from this article. When we first started this review, the SPECviewperf version then available was grossly out of date, so we opted not to include it in our testing. Just as we were about to go to press, SPECviewperf 11 was launched and represents the most up-to-date version of this benchmarking application. In examining the datasets provided in this release, we noticed that 3ds Max and AutoCAD had not yet been included and that the datasets instead focused heavily on CAD/CAM modeling apps. As such, we opted to forgo using it for testing until more relevant datasets become available.

The Methodology

Our AutoCAD tests included three models: an office chair, a large commercial office tower and a small residential house. Using a custom script, each model was rotated about its origin on two separate axes using five different viewport shading modes (wireframe, hidden line, flat, conceptual and realistic). Each test was run three times to obtain an average frame rate for rotating the model on screen.

Our SketchUp test included a single model of a large external commercial space. Using the built-in Ruby script command "Test.time_display", we were able to rotate the scene about the origin in three different viewport rendering modes: Shaded w/ text, Shaded w/ text and X-Ray, and Wireframe. Each test was run three times to provide an average frame rate for rotating about the scene.

Our 3ds Max tests consisted of two scenes. The first was a large commercial external scene with several very large polygon tree models. Using a custom MAXScript, we recorded the time it took to run 100 frames of an animation path and from that determined an FPS (frames per second) rate. Our camera path flew around the extents of the scene. This test was run three times to obtain the average frame rate. The second scene is a long, textured Greeble tunnel with a camera path that flies down the length of the tunnel. Every polygon is visible throughout the entire camera path, with the number diminishing as the camera approaches the end of the tunnel. Worth noting is that our original test script used the built-in 3ds Max frame rate indicator. At the last minute we found out that it can report grossly inaccurate results. We're still investigating this with Autodesk, but our new script completely removed the built-in indicator from the equation to ensure accurate results.
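The timing approach described above (time a fixed number of frames, divide, then average three runs) can be sketched as follows. This is a minimal Python illustration, not the MAXScript used for the review; `render_frame` is a hypothetical stand-in for whatever advances and redraws the viewport by one frame.

```python
import time

def measure_fps(render_frame, frame_count=100):
    """Time `frame_count` calls to `render_frame` and return frames per second."""
    start = time.perf_counter()
    for frame in range(frame_count):
        render_frame(frame)  # hypothetical: advance and redraw one frame
    elapsed = time.perf_counter() - start
    return frame_count / elapsed

def average_fps(render_frame, runs=3, frame_count=100):
    """Repeat the timed run and average the per-run FPS values."""
    results = [measure_fps(render_frame, frame_count) for _ in range(runs)]
    return sum(results) / len(results)
```

Measuring wall-clock time around the whole run, rather than reading an on-screen counter, is what keeps the result independent of the application's own frame rate indicator.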

Our final test is the packaged benchmark application - Cinebench, which was created by Maxon. While the test benchmarks both CPU and GPU performance, we only ran the GPU (OpenGL) test which records the average framerate of a vehicle chase scene. We feel that this test provided the least relevant information as it tests real-time rendering typical of games or real-time engines, rather than the more useful and used viewport performance involved with modeling and navigating architectural scenes.

The Testing Hardware

All tests were run on a custom-built workstation using an ASUS P6T6 Workstation Revolution motherboard with a single Intel Core i7 920 2.88 GHz 8MB processor and a 1000W Corsair power supply. The test machine has 12GB of Mushkin XP3-12800 SDRAM and two 128 GB Patriot Torqz SSD drives in a RAID 1 configuration. The operating system is Windows 7 Extreme 64-bit.

The Video Cards Reviewed

We would like to thank NVIDIA, AMD and RenderStream for providing us with all of the graphics cards tested in this review. We would also like to thank RenderStream for providing the 3ds Max Tunnel test scene, MacLaine Russell from WENDEL DUCHSCHERER Architects & Engineers for providing us with the SketchUp test scene, and finally Branko Jovanovic, who provided us with the exterior 3ds Max test scene. CGarchitect would also like to thank Mark Olson for programming our custom testing script for 3ds Max.

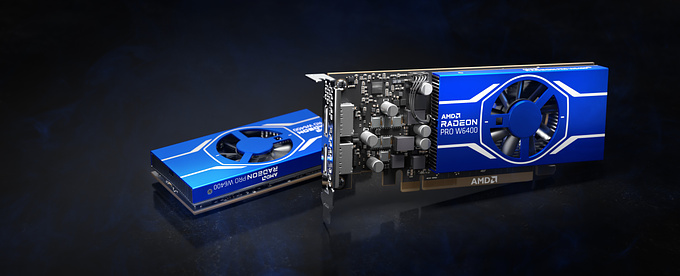

We reviewed the following display cards in this review: NVIDIA Quadro FX4800/3800/1800/580, ATI FirePro V8750/8700/7750/5700/3750, Radeon HD5870, GeForce GTX285/480

Chart Outlining the Cards Reviewed in this Article and their Specs.

Useful Links

http://www.xbitlabs.com/articles/video/display/quadrofx-firepro_10.html

NVIDIA

http://en.wikipedia.org/wiki/Comparison_of_Nvidia_graphics_processing_units#Quadro

ATI

http://www.amd.com/us/products/workstation/graphics/ati-firepro-3d/Pages/product-comparison.aspx

http://en.wikipedia.org/wiki/Comparison_of_AMD_graphics_processing_units

The Results

All of the Average Rank graphs below represent the ranking of all tests for each model. The numbers overlaid on the bars are the actual rank for the model/scene. The shorter the bar, the better the overall performance.

All of the other graphs represent the frames per second obtained for each scene/model and viewport rendering mode where applicable. The taller the bar, the better the performance.
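For readers who want to reproduce an "average rank" from their own measurements, one plausible way to compute it is sketched below. The exact ranking rules used for the graphs are not spelled out in this article, so the tie handling here is an assumption, and the card names and FPS values are placeholders rather than measured results.

```python
def rank_cards(fps_by_card):
    """Rank cards for one test: 1 = highest FPS. Ties share the better rank."""
    ordered = sorted(fps_by_card.values(), reverse=True)
    return {card: ordered.index(fps) + 1 for card, fps in fps_by_card.items()}

def average_rank(tests):
    """Average each card's rank across a list of per-test FPS dictionaries."""
    ranks = {}
    for fps_by_card in tests:
        for card, rank in rank_cards(fps_by_card).items():
            ranks.setdefault(card, []).append(rank)
    return {card: sum(r) / len(r) for card, r in ranks.items()}

# Placeholder numbers for illustration only, not results from this review.
tests = [
    {"Card A": 60.0, "Card B": 45.0, "Card C": 30.0},
    {"Card A": 20.0, "Card B": 25.0, "Card C": 10.0},
]
print(average_rank(tests))  # {'Card A': 1.5, 'Card B': 1.5, 'Card C': 3.0}
```

A lower average rank means the card placed nearer the top across tests, which is why the shorter bar is the better one in the rank graphs.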

AutoCAD

Average Rank of All Tests - AutoCAD 2010 (Shorter is better)

Average Rank of All Tests - AutoCAD 2011 (Shorter is better)

AutoCAD 2010/2011 - Wireframe (FPS) (Higher is better)

AutoCAD 2010/2011 - Hidden (FPS) (Higher is better)

AutoCAD 2010/2011 - Flat (FPS) (Higher is better)

AutoCAD 2010/2011 - Conceptual (FPS) (Higher is better)

AutoCAD 2010/2011 - Realistic (FPS) (Higher is better)

3ds Max

Average Rank of All Tests - 3ds Max 2010 (Shorter is better)

Average Rank of All Tests - 3ds Max 2011 (Shorter is better)

3ds Max 2010/2011 - Wireframe (including NVIDIA Performance Drivers - PD) (FPS) (Higher is better)

3ds Max 2010/2011 - Hidden (including NVIDIA Performance Drivers - PD) (FPS) (Higher is better)

3ds Max 2010/2011 - Smooth w/ Highlights (including NVIDIA Performance Drivers - PD) (FPS) (Higher is better)

Google SketchUp

Average Rank of All Tests - SketchUp (Shorter is better)

SketchUp - All tests (FPS) (Higher is better)

CineBench

Average Rank of All Tests - CineBench (Shorter is better)

CineBench - All tests (FPS) (Higher is better)

Consumer Cards vs Professional Series Cards

As the results indicate, in many cases there is not a large difference between the consumer series cards and the professional cards, and at times the consumer cards even outperform them. To ensure this is a balanced review that provides the information required to make an informed decision, I've outlined some of the differences and benefits of the more expensive professional cards. I'll leave it up to you, the reader, to decide how important those items are for your pipeline.

NVIDIA

The following information was provided unedited from NVIDIA.

Why Choose the Quadro Workstation Board Over NVIDIA GeForce?

Today’s high performance consumer graphics boards while possessing extremely powerful graphics performance, are primarily designed for gaming performance, whereas professional visual imaging and 3D applications have more intricate needs that require additional features and a different balance in some aspects of the graphics boards features.

NVIDIA Quadro solutions offer features that provide additional functionality required by professional creative professionals:

• Accelerated Video Playback using the Adobe Mercury Playback Engine: The new Mercury Playback Engine in Adobe Premiere Pro CS5 give video professionals the ability to work with complex HD timelines in real-time without having to generate preview renders to see their final output. NVIDIA Quadro graphics are the recommended solution by Adobe for video professionals. (Supported on Quadro FX 3800, FX 4800 and FX 5800).

• Support for Professional SDI Output: Quadro FX also provides support for professional video output via SDI for film and video post-production and broadcast graphics. The FX3800/4800/5800 support 2 channels of SD or HD-SDI output.

• Application testing and certification: All NVIDIA Quadro FX graphics boards are consistently tested and certified with dozens of leading professional applications, providing guaranteed compatibility, stability, and optimization with the key professional applications in MCAD, DCC, oil and gas, and scientific visualization markets.

• Custom application drivers and utilities: NVIDIA Quadro FX boards offer enhancements vital to graphics professionals, including the 3ds Max Performance Driver for Autodesk 3ds Max and the accelerated AutoCAD Performance Driver for AutoCAD users.

• Support commitment of three years: NVIDIA provides support and driver updates for all NVIDIA Quadro graphics boards for three years from the date of introduction.

• Planned availability of boards for 18 months: With NVIDIA GeForce and other consumer cards, there is no guarantee how long the card will be available, but NVIDIA Quadro boards typically have an availability of 18 months, providing a more stable IT platform across your enterprise.

• Enhanced driver configuration: Designed to support the more varied needs of professional applications, Quadro drivers let users adjust settings for texture memory size, buffer flipping mode, anti-aliasing line gamma, texture color depth, stereoscopic display settings and overlay control–all of which are unavailable on NVIDIA GeForce cards. This allows users to customize DirectX and OpenGL settings that are important for professional 3D, Professional Imaging and Visualization applications.

• Support for G-Sync: The Quadro FX 5800, FX 4800 and FX3800 can utilize and optional G-Sync board to provide advanced multi-system visualization and multi-device film and video environments. The Quadro G-Sync supports frame lock, swap sync, stereo sync, and house sync.

• Support for SDI: The Quadro FX 5800, FX 4800 and FX3800 also provide support for professional video output via SDI for film and video post-production and broadcast graphics. The boards support 2 channels of SD or HD-SDI output, and digital and analog genlock.

OpenGL feature hardware acceleration and support:

• Hardware overlay planes: Enhances performance of 3D graphics when obscured by the cursor, pop-up menus, and other visual enhancements.

• Hardware antialiased lines: Vastly improves the display of 2D and 3D wireframe views in design and visualization software, at a higher performance level than on GeForce, and without taking extra video memory for oversampling.

• Two-sided lighting: Renders the front and back sides of triangles to ensure visibility of surface meshes and cutaway views, no matter the viewpoint.

• Windowed OpenGL quad-buffered stereo: Unavailable on GeForce cards, quad-buffered stereo gives designers a real-life perspective on their models while maintaining full double-buffering for the left and right views.

• Hardware-accelerated clip regions: Designed to handle the many windows and dialog boxes that can impinge upon the 3D graphics window, NVIDIA Quadro FX boards include up to eight clip regions, as opposed to the single clip region offered on GeForce cards.

ATI

ATI FirePro products are optimized and certified for many CAD and DCC applications.

ATI FirePro offers dedicated driver support via regular Catalyst software updates that are based on certified proven testing with leading professional graphics applications.

AMD offers direct customer access to a dedicated professional class technical support team.

Background Technical Information

One of the things I've always found confusing about comparing video cards is the individual specifications of each card. Most of the terms used in these comparison tables make little sense to most people without a lot of extra research. We've partnered with the very knowledgeable guys over at RenderStream for this review, and they have provided a glossary to help explain some of the main terms you will run into on vendor websites. While this information is quite technical, we hope it will act as a one-stop resource for people in our industry who want to delve a bit deeper into the card specifications. This information, coupled with the test results above, should provide what you need to make an informed purchasing decision.

GLOSSARY OF TERMS (Warning: Very Technical)

CUDA Cores / Shader Processing Units

CUDA cores are processor cores that can run CUDA (Compute Unified Device Architecture), a parallel computing architecture developed by NVIDIA. CUDA is the computing engine in NVIDIA graphics processing units (GPUs), though it has also been used successfully to compile code for multi-core CPUs. These floating point processors are arrayed in graphics cards to do stream processing of data, which in this article means visualization. NVIDIA and ATI use significantly different chip architectures to accomplish the same thing. For an equivalent GPU, ATI chips have many shader processors that, with optimized code, can each process up to five instructions simultaneously, whereas NVIDIA processes instructions serially through the shader processor. The catch is that fully utilizing the 5X processing capability of the ATI design is nontrivial, and the technology still appears to be maturing. At the end of the day, typical capability will be of the same order as the NVIDIA processor. Ultimate performance is then determined by other parts of the chip and, again, by how well the software uses them. [ref: pages 3-8 in http://www.anandtech.com/show/2556/1]

Stream Computing

Streams, or threads, are collections of elements that require similar computation and, because of this similarity, provide an opportunity for parallelization. A shader (or kernel, for non-graphics GPGPU processing) is a function applied to each element. The fewer dependencies there are between streams, the higher the computational throughput. To illustrate the difference between a CPU and a GPU: a 6-core CPU may have twelve (12) threads, whereas a GPU may have fifty-five thousand (55,000) threads, all capable of running in parallel.
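The idea of a kernel applied independently to every element of a stream can be illustrated with a small Python sketch. The `brighten` kernel and the pixel values are made up for this example, and a CPU thread pool only mimics the structure of the computation; it says nothing about real GPU throughput.

```python
from concurrent.futures import ThreadPoolExecutor

def brighten(pixel):
    """Kernel: works on one element and depends on no other element."""
    return min(pixel + 40, 255)

def run_stream(kernel, stream, workers=4):
    """Apply `kernel` to every element; results keep the input order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(kernel, stream))

pixels = [0, 100, 200, 250]  # illustrative 8-bit brightness values
print(run_stream(brighten, pixels))  # [40, 140, 240, 255]
```

Because no call to the kernel needs the result of another, the work can be split across as many workers as the hardware offers, which is the property GPUs exploit.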

Memory Size (see table above)

Memory Interface (see table above)

Memory Bandwidth (see table above)

SLI/CrossFire functionality (see table above)

How does power consumption affect PS (Pixel Shader) requirements?

This depends on many things, but in general one looks at how much energy it takes to render a frame over the time the render takes. A card that renders quickly at low energy will be the most efficient for a given application.
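As a rough worked example of the energy-per-frame idea: power in watts is energy in joules per second, so dividing a card's power draw by its frame rate gives joules per frame. The wattages and frame rates below are hypothetical and chosen only to make the arithmetic easy to follow.

```python
def energy_per_frame(power_watts, frames_per_second):
    """Joules used per frame: watts are joules per second, so divide by FPS."""
    return power_watts / frames_per_second

# Hypothetical figures for illustration only, not measurements from this review.
card_a = energy_per_frame(power_watts=200, frames_per_second=50)  # 4.0 J
card_b = energy_per_frame(power_watts=100, frames_per_second=20)  # 5.0 J
print(card_a, card_b)
```

In this made-up case the card that draws more power is still the more efficient one per frame, because it finishes each frame so much sooner.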

How does higher floating point precision affect output/color etc?

Double precision is needed for particular computations that are sensitive to round-off error. For example, rigorous coupled wave analysis (RCWA) Maxwell Equation solvers used in electromagnetic field analysis (physics) need double precision to maintain accuracy. For visualization this is not much of an issue and won’t be considered here.
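To see what sensitivity to round-off error looks like in practice, the sketch below adds 0.1 one hundred thousand times in single and in double precision. Python floats are double precision, so single precision is emulated here by rounding through the standard `struct` module; the step size and iteration count are arbitrary choices for the illustration.

```python
import struct

def to_float32(x):
    """Round a Python float (64-bit) to the nearest 32-bit float."""
    return struct.unpack("f", struct.pack("f", x))[0]

def accumulate(step, count, single_precision):
    """Sum `step` `count` times, rounding to 32 bits after each add if asked."""
    if single_precision:
        step = to_float32(step)
    total = 0.0
    for _ in range(count):
        total += step
        if single_precision:
            total = to_float32(total)
    return total

err32 = abs(accumulate(0.1, 100_000, True) - 10_000)
err64 = abs(accumulate(0.1, 100_000, False) - 10_000)
print(err32, err64)  # the single-precision error is far larger
```

Long chains of dependent arithmetic like this are where double precision matters; a viewport drawing a frame rarely accumulates error in this way.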

HDR support - does it affect end users?

The use of high dynamic range (HDR) to render images has changed computer graphics forever. All the cards we evaluated support Shader Model 4.0 or greater and can fully handle an HDR of 1,000,000,000:1 (10^9:1). This means they can capture a range of illumination from starlight to sunlight with higher color precision and a wider color gamut. It also means that pixels can be mapped directly to radiance, opening a whole new universe to the graphics artist. It allows image-based lighting: HDR photographic images as light sources, HDR light probes and global illumination. It also enhances image editing: exposure/tone mapping and accurate advanced compositing.

The issue is that HDR is intensive (read: expensive) in both memory bandwidth and file size. Low dynamic range images store 8 bits per color component, i.e., 24 bits per pixel (bpp) for RGB. These images can represent only a limited amount of the information present in real scenes, where luminance values spanning many orders of magnitude are common. To accurately represent the full dynamic range of an HDR image, each color component is stored as a 16-bit floating-point number, so an uncompressed HDR RGB image needs 48 bpp. To reduce bandwidth and storage requirements, and with them the processing cost, compression is used that decompresses on the fly at the GPU, with the current champions achieving 6:1 compression under DirectX 11.
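The bits-per-pixel figures above translate into image sizes as follows. The 1920 x 1080 resolution is an assumption picked for the example; the 24 bpp, 48 bpp and 6:1 values come from the text.

```python
def image_megabytes(width, height, bits_per_pixel):
    """Uncompressed image size in megabytes (1 MB = 1,000,000 bytes)."""
    return width * height * bits_per_pixel / 8 / 1_000_000

width, height = 1920, 1080                 # assumed resolution for illustration
ldr = image_megabytes(width, height, 24)   # 8 bits x 3 color components
hdr = image_megabytes(width, height, 48)   # 16-bit float x 3 color components
hdr_compressed = hdr / 6                   # the 6:1 ratio quoted above

print(f"LDR {ldr:.2f} MB, HDR {hdr:.2f} MB, HDR at 6:1 {hdr_compressed:.2f} MB")
```

At this resolution the uncompressed HDR frame is twice the size of the LDR one, and 6:1 compression brings it down to about a third of the LDR size.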

To use HDR to the limits of the technique, the practitioner needs a graphics card that supports Shader Model 4.0 or better and DirectX 10 or above, preferably above.

Software Support

Shader Model

Shaders at their simplest are software or hardware-based instruction sets that program the GPU to describe the visual effect at each pixel on screen, calculating the rendering effects within the rendering pipeline. Today, the more flexible and highly customizable programmable shaders have mostly replaced fixed-function shader pipelines, which allowed only a restricted set of geometry transformations and pixel shading functions. Shaders are written to apply transformations to a large set of elements concurrently, for example to each pixel in an area of the screen or to every vertex of a model, which makes them ideal for the parallelization that the GPU design exploits to great advantage.

Direct3D (the 3D component of DirectX) and OpenGL use three types of shaders:

· Vertex shaders are run once for every vertex given to the GPU. They are used to map 3D information to the 2D screen and provide a depth value for the Z-buffer (i.e., the depth buffer). Vertex shaders manipulate properties such as position, color, and texture coordinate, but they cannot create new vertices. The vertex shader output goes to the next stage in the pipeline, which is either a geometry shader, if present, or otherwise the rasterizer.

· Geometry shaders add and remove vertices from a mesh. They can be used to generate geometry procedurally or to add volumetric detail to existing meshes that would be too costly to process on the CPU. If geometry shaders are being used, the output is then sent to the rasterizer.

· Pixel shaders, as Direct3D calls them, or fragment shaders in OpenGL's arguably more correct terminology, calculate the color of individual pixels. The input for these shaders comes from the rasterizer, which fills in the polygons being sent through the graphics pipeline. Pixel shaders are typically used for scene lighting and related effects such as bump mapping and color toning. They are often called multiple times per pixel, once for every object that occupies the corresponding space, even if that object is occluded; the Z-buffer sorts this out later.

Today the unified shader model unifies the three aforementioned shaders in OpenGL and Direct3D 10, again allowing for greater flexibility and scalability.
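The roles of the vertex shader, pixel shader and Z-buffer described above can be mimicked in a few lines of Python. This is a toy sketch and not real shader code: the perspective divide, the brightness-scaling "lighting" and all function names are simplifications invented for the illustration, and the rasterizer stage is skipped by supplying fragments directly.

```python
def vertex_shader(vertex, focal_length=1.0):
    """Map a 3D point to 2D screen coordinates plus a depth value."""
    x, y, z = vertex
    return (focal_length * x / z, focal_length * y / z, z)

def pixel_shader(base_color, light_intensity):
    """Compute a fragment's color; here a simple brightness scale."""
    return tuple(round(c * light_intensity) for c in base_color)

def resolve_depth(fragments):
    """Z-buffer step: for each pixel, keep the fragment nearest the camera."""
    z_buffer = {}
    for pixel, depth, color in fragments:
        if pixel not in z_buffer or depth < z_buffer[pixel][0]:
            z_buffer[pixel] = (depth, color)
    return {pixel: color for pixel, (depth, color) in z_buffer.items()}

# Two objects cover the same pixel; both are shaded, then depth decides.
fragments = [
    ((5, 5), 10.0, pixel_shader((200, 0, 0), 0.5)),  # far, red
    ((5, 5), 2.0, pixel_shader((0, 200, 0), 1.0)),   # near, green
]
print(vertex_shader((2.0, 4.0, 2.0)))  # (1.0, 2.0, 2.0)
print(resolve_depth(fragments))        # {(5, 5): (0, 200, 0)}
```

Note that the pixel shader ran for the occluded red fragment as well, which is the "called multiple times per pixel" behavior mentioned in the last bullet.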

[Ref quote: “A shader is a set of software computing instructions, which is used primarily to calculate rendering effects on graphics hardware with a high degree of flexibility. Shaders are used to program the graphics processing unit (GPU) programmable rendering pipeline, which has mostly superseded the fixed-function pipeline that allowed only common geometry transformation and pixel shading functions; with shaders, customized effects can be used.” [http://en.wikipedia.org/wiki/Programmable_shader#Parallel_processing]

“Shaders are written to apply transformations to a large set of elements at a time, for example, to each pixel in an area of the screen, or for every vertex of a model. This is well suited to parallel processing, and most modern GPUs have multiple shader pipelines to facilitate this, vastly improving computation throughput.” [http://en.wikipedia.org/wiki/Programmable_shader#Parallel_processing]]

[Ref quote: “Types of shaders

The Direct3D and OpenGL graphic libraries use three types of shaders.

· Vertex shaders are run once for each vertex given to the graphics processor. The purpose is to transform each vertex's 3D position in virtual space to the 2D coordinate at which it appears on the screen (as well as a depth value for the Z-buffer). Vertex shaders can manipulate properties such as position, color, and texture coordinate, but cannot create new vertices. The output of the vertex shader goes to the next stage in the pipeline, which is either a geometry shader if present or the rasterizer otherwise.

· Geometry shaders can add and remove vertices from a mesh. Geometry shaders can be used to generate geometry procedurally or to add volumetric detail to existing meshes that would be too costly to process on the CPU. If geometry shaders are being used, the output is then sent to the rasterizer.

· Pixel shaders, also known as fragment shaders, calculate the color of individual pixels. The input to this stage comes from the rasterizer, which fills in the polygons being sent through the graphics pipeline. Pixel shaders are typically used for scene lighting and related effects such as bump mapping and color toning. (Direct3D uses the term "pixel shader," while OpenGL uses the term "fragment shader." The latter is arguably more correct, as there is not a one-to-one relationship between calls to the pixel shader and pixels on the screen. The most common reason for this is that pixel shaders are often called many times per pixel for every object that is in the corresponding space, even if it is occluded; the Z-buffer sorts this out later.)

The unified shader model unifies the three aforementioned shaders in OpenGL and Direct3D 10. See NVIDIA faqs.” [http://en.wikipedia.org/wiki/Shader]

OpenGL version

OpenGL (Open Graphics Library) is a cross-platform, cross-language application programming interface (API) for rendering 2D and 3D graphics. [ref: http://en.wikipedia.org/wiki/OpenGL]

DirectX

“Microsoft DirectX is a collection of application programming interfaces (APIs) for handling tasks related to multimedia, especially game programming and video, on Microsoft platforms. Originally, the names of these APIs all began with Direct, such as Direct3D, DirectDraw, DirectMusic, DirectPlay, DirectSound, and so forth. The name DirectX was coined as shorthand term for all of these APIs (the X standing in for the particular API names) and soon became the name of the collection. When Microsoft later set out to develop a gaming console, the X was used as the basis of the name Xbox to indicate that the console was based on DirectX technology. [1] The X initial has been carried forward in the naming of APIs designed for the Xbox such as XInput and the Cross-platform Audio Creation Tool (XACT), while the DirectX pattern has been continued for Windows APIs such as Direct2D and DirectWrite.

Direct3D (the 3D graphics API within DirectX) is widely used in the development of video games for Microsoft Windows, Microsoft Xbox, and Microsoft Xbox 360. Direct3D is also used by other software applications for visualization and graphics tasks such as CAD/CAM engineering. As Direct3D is the most widely publicized component of DirectX, it is common to see the names "DirectX" and "Direct3D" used interchangeably.

The DirectX software development kit (SDK) consists of runtime libraries in redistributable binary form, along with accompanying documentation and headers for use in coding. Originally, the runtimes were only installed by games or explicitly by the user. Windows 95 did not launch with DirectX, but DirectX was included with Windows 95 OEM Service Release 2.[2] Windows 98 and Windows NT 4.0 both shipped with DirectX, as has every version of Windows released since. The SDK is available as a free download. While the runtimes are proprietary, closed-source software, source code is provided for most of the SDK samples.

Direct3D 9Ex, Direct3D 10 and Direct3D 11 are only available for Windows Vista and Windows 7 because each of these new versions was built to depend upon the new Windows Display Driver Model that was introduced for Windows Vista. The new Vista/WDDM graphics architecture includes a new video memory manager that supports virtualizing graphics hardware to multiple applications and services such as the Desktop Window Manager.”[ http://en.wikipedia.org/wiki/DirectX]

Important features for GPU Rendering

There are numerous rapidly maturing GPU rendering applications on the market today, moving us closer and closer to full-scale implementation. Currently, most of these applications use only a single GPU card. The reason for the move to the GPU is the potential speed gain. In an ideal world, a program running on a GPU will run 2.5X faster than the ideal version of that software running on a 6-core CPU; in practice very little CPU software is ideally programmed, so the potential speed gain can be orders of magnitude larger than that. While I am not a programmer, it is clear to me that, although there are restrictions to programming the GPU, clever people are kicking the CPU in the pants.

So what will you need to get the most value out of these new GPU-based applications?

Memory: if you need 4 GB of main memory to do a rendering now, you are going to need roughly the same amount of GPU memory to move that render to the video card.

Further, the card should support DirectX 10 or higher, and ideally hardware tessellation. Some applications may also need CUDA or, in the future, OpenCL.

It has never been truer that the user will need professional cards, because NVIDIA and ATI are simply going to be focused on making these the cards of choice for GPU rendering.

Who will win this race to the render farm (in a workstation)?

Floating point processors are arrayed in graphics cards to do stream processing of data, which in this article means visualization. NVIDIA and ATI use significantly different chip architectures to accomplish the same thing. For an equivalent GPU, ATI chips have many shader processors that, with optimized code, can each process up to five instructions simultaneously, whereas NVIDIA processes instructions serially through the shader processor. Fully utilizing the 5X processing capability of the ATI design is apparently nontrivial, so at the end of the day typical capability is currently of the same order as the NVIDIA processor. Ultimate performance is then determined by other parts of the chip and by how well the software uses them. So who will win? I think we will just have to wait and see. [ref: pages 3-8 in http://www.anandtech.com/show/2556/1]

Conclusion

AutoCAD

In terms of overall performance rank, based on the average results from all three test scenes, there were some interesting results. In AutoCAD 2010, the best overall card by a fairly large margin was the ATI Radeon HD5870, due primarily to its performance on the smaller chair model in wireframe and flat shaded modes. In AutoCAD 2011, the NVIDIA FX4800 ranked highest due to its ability to perform well on most of the tests and hold its ground in wireframe mode.

In wireframe mode, the consumer cards performed two to seven times better than any of the professional series cards. In hidden line mode, NVIDIA's Quadro cards performed best, especially in AutoCAD 2011, where there appear to be some significant optimizations in the NVIDIA performance driver. In the realistic shading mode, the ATI cards and the GeForce cards performed best with the smaller chair model, with NVIDIA's Quadro cards falling slightly behind in AutoCAD 2011 but significantly behind in 2010. The Quadro cards did excel on the larger models in AutoCAD 2011. In the conceptual rendering mode, the ATI cards and the GeForce cards performed nearly identically. The only cards in this mode that stood above the rest were the Quadro cards in AutoCAD 2011, with a three- to six-fold increase in performance. Although Flat mode is not accessible from the AutoCAD interface directly, we did test this mode. The two top FirePro cards and the consumer cards performed best on the smaller chair model, with the Quadro cards performing better in AutoCAD 2011 for the other tests.

The results were not consistent between tests or versions, which makes selecting the best card for AutoCAD the most difficult. It's clear that the NVIDIA performance drivers coupled with AutoCAD 2011 saw the largest performance increases. Most people will use the wireframe and hidden line modes, so for the purposes of this recommendation, I would put more weight on those results. If you do most of your work in wireframe mode, then one of the consumer cards offers the best value and performance. If you do more of your work in hidden line mode, then the FX1800 is the best value for the performance. If you use a different mode, or prefer the benefits of some of the professional series cards, then I would suggest looking at the price, performance and features and determining which works best for you.

3ds Max

Looking at the overall rank of all tests averaged, the Quadro FX3800 offers the best all-around performance in both 3ds Max 2010 and 2011. Looking more closely at the viewport modes, however, shows that there is very little difference between any of the cards regardless of manufacturer or model. Unfortunately, due to the underlying architecture of 3ds Max, the graphics pipeline is bound heavily by the CPU, which prevents it from taking full advantage of the GPU. Hopefully future versions of the software will address this. The Quadro performance drivers give a slight edge over the ATI cards and GeForce cards in wireframe mode, but in most cases the differences in our tests are quite small, generally in the range of 5-10 frames per second more. In the Hidden Line and Smooth & Highlights modes, the results were for the most part very close to each other. Based on its ability to outperform on the Tunnel test, my recommendation would be the GeForce GTX480 card.

SketchUp

In SketchUp the highest overall ranking card is the GeForce GTX285; however, if you look at the frames per second for the various tests, every card performed almost identically. It's clear that SketchUp does not take full advantage of the capabilities of any display card. As you are likely using other 3D applications or CAD software, I would base my selection on the performance metrics for one of those instead, as SketchUp will not be affected by your selection.

CineBench

CineBench is the only test whose results scaled close to linearly with the capabilities of the display card. This should not come as a surprise, however, as this particular test uses a lot more of the card's real-time rendering capabilities. While it is a valuable test, it is the least applicable to most people in the architectural visualization industry, as it tests capabilities of the card that most will never use. The highest performing cards are ATI's top two FirePro cards, followed by NVIDIA's GeForce cards. The performance increase observed on the FirePro cards was quite significant, nearly double that of all of their competitors in this review.

GPU Compute

Although I mentioned earlier in this article that we would not be evaluating the compute capabilities of these cards, if you are in the market for a new card and plan on experimenting with GPU rendering, I would be remiss if I did not mention that the card with the most RAM and stream processors should be at the top of your list. This may be the opposite of the selection you would make from a purely viewport performance standpoint. Once these GPU renderers are publicly released, we will test them further.

Community Feedback

One of the things that is very hard to test in an isolated environment is driver and hardware reliability. These types of questions are best answered through feedback of an entire community. For this reason we've created a very short survey that readers can take to provide individual information on their hardware and driver experience.

http://www.surveymonkey.com/s/graphics-card

The results of this survey can be seen HERE. Please note that these results are not necessarily statistically accurate. We simply provide this survey and results data as a rough indicator of our community's experience.

About RenderStream

RenderStream is a supplier of high-end workstations, render farms, high-speed multi-tiered storage, high-speed networks and GPU based high performance computers. We focus largely on providing optimized hardware solutions for the CG industry. Our approach is unique in that we combine industry leading hardware experts and talented 3D software specialists to test, tweak, adjust and perfect every computer we ship.

If you would like to post comments or questions about this review, please visit our forum.

About this article

CGarchitect has just wrapped up one of the most intensive and exhaustive reviews we have ever done. Our graphics card round up reviews the top graphics cards from NVIDIA and ATI and evaluates performance in the top apps in our industry as well as how professional series cards stack up against consumer cards.