Interviews

Interview with Arnold Gallardo, Visual Content Creator

Arnold Gallardo, author of '3D Lighting: History, Concepts, and Techniques', is an industry veteran and an expert in the field of Global Illumination and Radiosity. Arnold talks to us about his experiences in the field and relates his knowledge of current technologies as well as those to come.

CGA: Could you introduce yourself and tell us a little bit about your company?

The title that I hold is 'Visual Content Creator' and that covers most of what I do, since many disciplines are involved, from photography and illustration to computer graphics/animation. I also deal with graphic design and layout as well as scientific/anatomical illustrations, aside from doing architectural CG.

CGA: Tell us about your background. How did you get into the Architectural CG industry?

I am a traditionally trained artist/draftsman. I remember the days of using T-squares, triangles and French curves. I am also a fusion welding metal sculptor using mainly ferric materials. I am also a still photographer and printer. The old cliché that traditional training and talent are more valuable to CGI is something I believe, as is the value of being exposed to other disciplines and not having one field's tunnel vision.

My background relating to the Arch CG industry goes back to my traditional training as a draftsman and my personal interest in Architecture. I became serious about doing Architectural visualization when I bought Lightscape to simulate and study traditional lighting setups for eventual commercial photography applications. However, another side to this was the experience of seeing in a magazine the Vermeer-inspired two-pass rendering (radiosity+raytracing) image done by John Wallace, Michael Cohen and Donald Greenberg. I could not forget that image and could not believe it was done with computer graphics, just like the early ones by Eric Haines.

CGA: You are well known for your knowledge in CG lighting, how did you become involved with this particular aspect of the industry?

My knowledge of CG lighting and the technical side that comes with it mainly came from my early disappointments with understanding Lightscape and the inability to get satisfying renderings from raytraced and radiosity solutions. Since some of my questions about my use of Lightscape bordered on 'proprietary information', Lightscape Technologies (now Discreet Logic) understandably could not provide this information to an end user like me, so I was forced to research and study this field alone.

I pretty much went back and read almost all the research papers on raytracing and radiosity I could find. Back then I had to convert most of the postscript files into PDF to be able to read them on the Windows platform. I owe a debt of gratitude to all the CG researchers who have placed their papers online for all to read and for others who have answered private queries.

Radtest1, Radtest3 - Arnold Gallardo

Most of my difficulties with Lightscape, I later found out, were caused by my misunderstanding of radiosity and the inherent demands associated with it during the 3D mesh modeling stage. Although my expectations then were never of the 'magic render button' kind, it was frustrating since you really do not know why you are getting the kind of results you are getting. Although the manuals were wonderful in terms of teaching you how to utilize the software, I needed to know how the technology works so I could make my solutions process faster and more efficiently. The issues I encountered at this stage were not just related to software parameters but mainly to modeling and, specifically, the way things are modeled in 3D. As most 3D artists know, for rendering technologies such as raytracing, how you model the forms/shapes and surfaces does not matter much as long as you apply the appropriate shader/textures and other material property settings. With radiosity, the situation is very different: you have to explicitly indicate occlusion and boundary areas and outline those with polylines. Having quadrilaterals for all surfaces also helps speed up the radiosity processing, especially for the irregular polygon areas, which need to be manually divided into smaller, mostly quadrilateral sections. These things are not so obvious to an end user and, most often, this kind of workflow is not part of the normal 3D modeling process, especially if you use CAD software. The issue of 'solid vs surface modeling' is very obvious when dealing with radiosity. Ultimately, this knowledge became a 'need' because of the rendering situations I needed to solve and the need to optimize the modeling-rendering workflow.

CGA: How have you seen the architectural CG industry grow, with respect to lighting, since you have been involved in the industry?

The industry has grown a lot in recognition of CG as a viable tool for lighting analysis and evaluation. Lighting manufacturers now have IES data online along with 3D luminaire mesh 'blocks' for 3D renderer/applications.

Also, it used to be acceptable and even preferable to just use traditional CG lights for the visualization, to avoid the extensive computer resource demands of radiosity/global illumination; today, using radiosity/global illumination is welcomed if not expected. Global Illumination really has come a long way. It was much easier then for most people to say 'it's too much work and preparation, if not rendering time, compared to regular CG lighting setups'. Global Illumination then was seen as burdensome and as 'overkill' when simpler and faster techniques exist which give acceptable and comparable images.

CGA: GI and radiosity have become fairly commonplace over the past five years, what do you feel are the current limitations of these technologies for achieving accurately lit scenes?

The current limitations of GI/radiosity are several, and these mainly pertain to perceptual and 'light to material interaction' transfer issues. There is also the question of 'media participation' and the 'near-field photometry' problem, which are not often discussed.

Perceptual issues mean that we need to have some way of presenting a rendered image as expected or desired by the end viewer, as dictated by the particular scene location/situation and the time component. Since our eyes adjust so well and so quickly when there is a light change, our impression of the real world is so ingrained and seamless that we can tell something is off or wrong in a render but not necessarily know why. Tonemapping is a perceptual issue that is casually encountered when it should be emphasized and explained thoroughly as to its capabilities and limitations. Many implementations of it are handled through 'brightness and contrast' parameters without explaining necessary concepts such as 'clipping' and 'tonal compression'. There has been a lot of research on perceptually-based tonemapping, such as Greg (Ward) Larson's or Tumblin/Rushmeier's, but I have not seen these applied to a commercial GI/radiosity application, and these approaches have limitations of their own as well. Also, the issue of how to plot and display the computed luminance values on a limited display device (monitor) is not always explained, along with the difference between using a 'linear vs logarithmic' distribution. Ultimately, the limitations of our knowledge about the way our visual system works are the big barrier; all we can do now is create models of how we see, test them in controlled conditions and see the results. The problem is that we do not live in 'controlled environmental conditions'. We need to address the issue of what our visual system really 'expects' before we can address the rendered image 'presentation'. I do not want to have an automated perceptually-driven 'black-box' button where I have no control over the end results, but I do want to see options and the possibilities of the computed luminance values before a final rendered image is done. This is because what we see will always be subjective and personal and differs from one person to another, but we do agree if a particular image is 'under-exposed' or 'washed out' or even 'looks wrong'.
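To make the 'clipping' versus 'tonal compression' distinction concrete, here is a minimal Python sketch. It is only an illustration under stated assumptions: the function names, the sample luminance values and the white point are hypothetical, and the logarithmic curve is a generic one, not the Ward Larson or Tumblin/Rushmeier operators mentioned above.

```python
import math

def tonemap_linear(luminance, white_point):
    """Scale linearly; anything brighter than white_point clips to 1.0."""
    return min(luminance / white_point, 1.0)

def tonemap_log(luminance, max_luminance):
    """Compress logarithmically so that max_luminance maps to 1.0."""
    return math.log1p(luminance) / math.log1p(max_luminance)

# Hypothetical computed scene luminances spanning four orders of magnitude.
luminances = [1.0, 10.0, 100.0, 1000.0, 10000.0]

# Linear mapping with an assumed white point of 100: the two brightest
# values become indistinguishable (clipped highlights).
linear = [tonemap_linear(l, white_point=100.0) for l in luminances]

# Logarithmic mapping keeps every value distinct (tonal compression).
logarithmic = [tonemap_log(l, max_luminance=10000.0) for l in luminances]

for l, lin, log in zip(luminances, linear, logarithmic):
    print(f"{l:>8.1f}  linear={lin:.3f}  log={log:.3f}")
```

With the linear mapping, everything at or above the white point lands on the same display value, while the logarithmic mapping trades contrast in the midtones for detail across the whole range.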

The second part is the limitations of our knowledge about how light interacts with real world materials. Although we now see implementations of complex shading models such as BRDF (bi-directional reflectance distribution function), like in LW6.5b, we are still far from getting really automated realistic renderings due to our limited sampling of real world material reflectance and transmission. For years we have used simple shading models (Phong, Gouraud, Lambertian etc.) to model the way light interacts with material, and these have worked for most purposes, but these shaders really model ideal light-material interactions. This is one of the reasons why we need to 'tweak' our scene parameters to get a better rendering, and it is why it is still a creative process today and into the foreseeable future. Right now, not all available renderers can handle 'specular to diffuse' light transfer (like light bouncing off a mirror and illuminating the area where the light is reflected) or deal with 'directional diffuse' light transfers (directionally-biased reflections due either to light/viewer position or to surface material properties).

We need to have an extensive database of all of the most common materials in use today, and this is a daunting task if not an impossible one. Most of the materials that are well known today are either classified military paints or multi-layered composite paints like those used on automobiles. This 'material sampling problem' is further complicated by the need to have expensive, predictable and reliable gonioreflectometers along with a competent operator. Of course, complex light interactions can be handled analytically (mathematically modeling the physics), but most surfaces are manufactured and are subjected to coatings and finishings, or could be 'unknown' or develop uneven chemical reactions (oxidation etc.), so it is preferable to sample these materials directly rather than create mathematical models for them. Implementing a fast and efficient complex 'light to material transfer' will be the next challenge, along with the need to reconcile this data with the way our visual system works.

'Media participation' is the light interaction with intervening materials such as particulate matter (smoke, dust etc.) or suspension material (fog, mists etc.). Currently most CG GI/radiosity renderers assume that there is no media participation, meaning that the light transfer is modeled as if the scene were in outer space, where there is neither air nor water vapor to create anything from subtle effects such as attenuation and absorption to dramatic effects such as volumetrics. What is needed is for the render engine to accurately model the light transfer with media participation, since this is the way it is in the real world, and it does change the way we experience light as the environmental conditions change.
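The simplest piece of media participation, attenuation along a ray, can be sketched with the Beer-Lambert law. This is a hedged illustration only: it assumes a homogeneous medium with a single extinction coefficient, ignores light scattered into the ray (so it cannot produce volumetric beams), and the function names and coefficient values are hypothetical.

```python
import math

def transmittance(extinction_per_m, distance_m):
    """Fraction of light surviving a path through a homogeneous medium
    (Beer-Lambert law): T = exp(-sigma_t * d)."""
    return math.exp(-extinction_per_m * distance_m)

def attenuated_radiance(radiance, extinction_per_m, distance_m):
    """Radiance arriving after absorption and out-scattering only."""
    return radiance * transmittance(extinction_per_m, distance_m)

# An extinction of 0.0 behaves like the 'outer space' assumption:
# nothing is lost along the path.
vacuum = attenuated_radiance(100.0, 0.0, 10.0)

# A hypothetical light haze (0.1 per meter) over the same 10 m path.
haze = attenuated_radiance(100.0, 0.1, 10.0)

print(vacuum, round(haze, 2))
```

A renderer that models participating media fully also has to add the light scattered toward the viewer at every point along the path, which is where most of the extra cost comes from.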

Lastly, there is the almost hidden issue of 'near-field photometry', which is something that every 3D artist who uses CG for visualization and analysis should know about: most IES data uses 'far-field photometry', which assumes the luminaire is a point source, which means that some indirect illumination luminance will not be accurately predicted by CG visualization. This is because the same luminaire, in reality and in the virtual world, is often placed so close to an adjacent surface (like 10-18 inches away) that at this distance it is definitely not behaving like a point source light. 'Far-field photometry' also assumes that the 'luminaire to surface' distance is at least five times the largest luminaire dimension.
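The five-times rule can be checked with a small Python sketch. The example below is an assumption-laden illustration, not a model of any real luminaire: it treats the source as a uniform Lambertian disc, looks only at a point on the disc's axis, and uses hypothetical function names. For that idealized case the on-axis illuminance has a closed form, so the error of the point-source approximation can be computed directly.

```python
import math

def is_far_field(distance, largest_dimension, factor=5.0):
    """Five-times rule: far-field data is considered valid when the distance
    is at least `factor` times the largest luminaire dimension."""
    return distance >= factor * largest_dimension

def disc_illuminance_exact(luminance, radius, distance):
    """On-axis illuminance from a uniform Lambertian disc."""
    return math.pi * luminance * radius**2 / (radius**2 + distance**2)

def disc_illuminance_point(luminance, radius, distance):
    """Same disc treated as a point source (inverse-square law)."""
    intensity = luminance * math.pi * radius**2
    return intensity / distance**2

def point_source_error(radius, distance):
    """Relative overestimate made by the point-source approximation."""
    exact = disc_illuminance_exact(1.0, radius, distance)
    approx = disc_illuminance_point(1.0, radius, distance)
    return (approx - exact) / exact

# Hypothetical 0.3 m diameter disc luminaire (radius 0.15 m).
for d in (0.3, 1.5, 3.0):
    print(f"d={d} m  far_field={is_far_field(d, 0.3)}  "
          f"error={point_source_error(0.15, d):.2%}")
```

For this idealized disc the error works out to (radius / distance) squared, so at five times the diameter the point-source assumption is off by about 1%, while at roughly 12 inches (0.3 m) it overestimates by about 25%.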

Some radiosity renderers do take this 'near-field photometry' problem into consideration and subdivide the light source, but others do not, and sometimes they do not even tell you anything if you ask specific questions such as these. Surely, metering the luminaire closely will solve this problem, but to be realistic, you would need to sample the luminaire for each distance that you will place it at, and this is not practical. There is progress being made on the luminaire data gathering stage using a new kind of photometer, but we all need to have this 'near-field photometry' problem tackled on the CG end as well. If this problem is solved, we will be able to have more accurate reflection and specularity of a particular luminaire, and the only question then is the amount of rendering time required to do such a virtual simulation, since the amount of computer processing and resources required might be 3x to 4x as much, if not more, compared to using simple luminaire models (point source, area light etc.). We cannot continue using far-field photometric web data in near-field situations and expect it to be a reliably accurate virtual scene model.

CGA: What are your thoughts about developments with standalone GI renderers? Do they have a place in the architectural market or have they been developed more for special F/X houses?

I think that the development of standalone GI renderers is good overall. They liberate us from the limitations of the built-in renderers in our 3D or CAD application. Some of them are very good at rendering believably acceptable scenes, as evident in the euphoria over the stills and animations rendered with Marcos Fajardo's 'Arnold-Global Illumination Renderer' (now PMG's Messiah:render). The subsequent explosion of GI after these 'Arnold' images shows the maturation of GI/radiosity in the eyes of the 3D community. I think that since GI, or more specifically 'monte-carlo based renderers', have all the benefits of radiosity (complete light transfer, color bleeding etc.) without its burdens (explicit geometry modeling, long solution processing etc.), the process is user friendly and is accepted readily into one's workflow. Another reason is that Monte Carlo-based global illumination renderers are geared for moving lights and objects, while radiosity requires 'static scenes'. Most Monte Carlo-based renderers have addressed the problem of 'noise' and 'flicker' (when animated), which is good, but there are different ways of achieving this and they are not all the same in quality.
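Where the 'noise' in Monte Carlo rendering comes from can be seen in a toy estimate. The Python sketch below is an illustration under simplifying assumptions, not how any of the renderers named above work: it estimates the irradiance on a surface under a uniform sky of radiance 1, whose exact value is pi, by sampling directions uniformly over the hemisphere. The function name, seeds and sample counts are hypothetical.

```python
import math
import random

def estimate_irradiance(num_samples, sky_radiance=1.0, seed=0):
    """Monte Carlo estimate of irradiance under a uniform sky.

    Directions are sampled uniformly over the hemisphere (pdf = 1 / (2*pi)).
    For that sampling, the cosine of the angle to the surface normal is
    itself uniformly distributed in [0, 1].
    """
    rng = random.Random(seed)
    total = 0.0
    for _ in range(num_samples):
        cos_theta = rng.random()
        # Estimator: radiance * cos(theta) / pdf
        total += sky_radiance * cos_theta * 2.0 * math.pi
    return total / num_samples

exact = math.pi
for n in (16, 256, 4096, 65536):
    estimate = estimate_irradiance(n, seed=n)
    print(f"{n:>6} samples  estimate={estimate:.4f}  "
          f"error={abs(estimate - exact):.4f}")
```

The error shrinks roughly with the square root of the sample count, so halving the noise costs about four times the samples. Each pixel (and each animation frame) gets its own random error, which shows up as grain in stills and flicker in animation unless the renderer takes steps to reduce it.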

Other standalone renderers (like Brazil aka Ghost) also specifically model camera optical effects like 'bokeh' (out of focus specular/light renditions), which is wonderful, while others use advanced algorithms like Photon Maps (3D virtualight) to create the final image. Camera optical effects, especially depth of field, change the way we view CG renderings: instead of tailoring the final render to how it is expected by the naked eye, optical effects like bokeh now force us to use our photographic experience and exposure (through stills and motion pictures) to evaluate and accept an image. If we narrow our visual expectations to our experience with the way the camera renders what we see, then the CG rendering process has been simplified a bit, since photography 'simplifies' as well as 'deceives' us of our expectations with an image representation. Caustics too have become a 'buzzword', but how many wine glass renderings could one make, and does one really need such a capability? How many scenes have special glass (like stained glass) in them that requires accurate caustics rendering? I really would rather have a 'specular to diffuse' function instead.

The currently available standalone GI renderers might be adequate for architectural CG purposes if the end result is only for visualization, and will probably not be adequate for lighting analysis applications, although some are capable of doing this very well, like Inspirer (Integra Co. Jp). These renderers are so new that only a few comprehensive comparative tests have been made, while others are not accessible at all. Radiosity-based renderers are more mature and predictable by nature and have the advantage of being 'view independent', whereas monte-carlo based renderers will always have to recompute everything again. Also, a solution to the 'near-field photometry' problem is already here for some radiosity renderers, without needing the comprehensive near-field photometry database for each luminaire that a Monte Carlo-based renderer requires. Whether the new standalone GI renderers will be beneficial to the arch-vis community, if not outright replacements for radiosity-based renderings, remains to be seen, since predictable and consistent results are needed. On this end it becomes an 'artist vs engineering need' situation. An artist only needs it to look great, while an engineer will only care about its speed and accuracy.

CGA: Where do you see the future of architectural rendering and how will new rendering technologies affect that future?

I see a trend towards physically based light transfer (light physics) on complex material properties settings (BRDF etc.) being combined with realistic tonal range (perceptually-based tonemapping) together with media participation, so light effects like attenuation and volumetrics will be accurately portrayed and rendered.

In radiosity there are several things happening: the emergence of new techniques (multi-clustering) with old algorithms will make it possible to have millions of polygons in a scene and have the radiosity solution processed in minutes with reasonable RAM and HD resources. It will also be possible to have explicit shadow boundaries without needing to have high resolution meshes. Moving objects and lights will also be made possible with radiosity, and the 'static scene' requirement will no longer apply.

Hybrid approaches (radiosity-monte-carlo) will make 'radiosity solution' processing faster and create a more complete global illumination solution. As alluded to above, ultimately we, along with the GI researchers, will have to choose between an 'artistically geared' GI solution and an 'engineering oriented' GI solution. The ideal thing is to merge both approaches, or at least have each of these two fields' needs addressed in the other's application.

CGA: You have recently published the well-publicized book entitled “3D Lighting: History, Concepts, and Techniques”. What motivated you to write this book?

CoverpageF3B - Arnold Gallardo

This book emerged because I wanted to write a Lightscape book, but a perception emerged that it is a 'niche market' and I got asked to do a different book instead. When I finally got down to doing this book, my main motivation was to deviate from the normal way of introducing CG lighting concepts, because many CG books jump into CG right away without acknowledging the debt it owes to, and the history it shares with, photography as well as the psychology/physiology field. So I had to start out with the nature of light, then move into eye physiology and perception, then into photography, and only then deal with computer graphics. I want the reader of this book to understand more of the 'whys' than the 'hows' or even 'what' is being done, because once you understand the 'whys', the 'how' and the 'what' are motivated by the 'why'. It is just like the chair design (or house) problem faced by architects/designers. Once you know why a chair exists (meaning the essence of it), then the how and the what follow. Especially for lighting, there are no rigid rules of application, just motivations to solve a particular problem. The book that I really wanted to write would have needed an additional thousand pages or more to cover all the necessary fields and information with tutorials. In this book, I have identical tutorials for three (3) different 3D applications for each concept shown, and that is a daunting task in itself.

CGA: Are there any new books in the works?

I am not currently working on any book, but I have been asked to do application-specific books instead of a general book such as a lighting book. But if there ever is a second lighting book, I know that it will have to be more scene-project oriented while retaining the lighting motivation angle, instead of being a hand-holding walk-through tutorial.

I would, however, still love to be able to do a Lightscape book, since that is very much needed, although the Lightscape folks at Discreet have been very good at providing technical support and resources for new users. It is so unlike the old days, when we had limited resources and few people to ask for help. But I do feel that we need a project-oriented reference book for it instead of just the amazing webforum/FAQ that Discreet has on its website.

I also want to do a modeling book on organic and hardware/architectural modeling. I especially would like to demonstrate the modeling techniques that I use that are geared for radiosity processing, since most people do not do this.

CGA: What have been your biggest challenges both past and present, with regards to computer renderings?

The biggest challenge has always been the lack of computer power, since we do scale up our work as we gain faster processors. We tend to do more detailed meshes along with larger texture maps and procedural shaders etc. as we upgrade our computers, since we can now do what we could not do before, and we hit that brick wall earlier than we expect. Even with network rendering we still encounter computer performance limitations.

The other challenge that has surfaced is the difficulty of matching swatches in CG, especially if all you have is a scan or digital photo and you have not seen an actual sample. You have to go back and forth and do render tests between your 3D/CAD application and your image editing program until you get it right. Recently, however, I have discovered Darktree procedural shaders, and they solved most of my problems, especially for outdoor renderings, since I also avoid the problem of texture map resolution along with the UV mapping situation.

CGA: Which rendering are you most proud of and why?

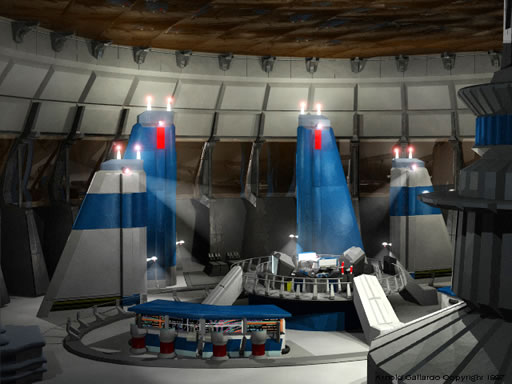

I have many favorite renderings but I particularly like the one I did collaboratively with an architect (Mario Grajales Leal) for a competition. This rendering only has regular CG lights in it, to get a more illustrative, impressionistic 'look' rather than a straightforward digital simulacrum. I had limited time to work on it and was involved in some of the architectural problem solving as well as in the luminaire design. So knowing the motivation for this building's design helped a lot in deciding the 'look' and 'feeling' for the visualization. Today, it is not often that a CG artist is given a part in the architectural decision process, and we are treated more like editorial graphic illustrators than partners and part of the architectural workflow to convey and bring about a concept and a vision.

ConeMGLproject1 - Arnold Gallardo

CGA: What software do you currently use and have you used in the past for computer renderings and why have you chosen those particular applications?

For radiosity-destined scenes, Lightwave 5.6 is mainly used because it can optimize the geometry for radiosity processing either automatically or manually via the Modeler. I need not say anything about the gorgeous beauty of Lightwave's render engine, which it is famous for. Its ability to handle large datasets is great, and the constantly criticized split of having to model in 'Modeler' and do texture, lighting and camera setups in 'Layout' is something that I like a lot and that is not there in other applications. If there is a need to change a section or an element of a scene, all that needs to be done is to tweak or create a new mesh in 'Modeler' and then do a load and replace mesh in 'Layout', so it is very easy to change and switch scene components. I can also continue to model in 'Modeler' while 'Layout' is rendering, which saves time.

Lvs-lw5, Padayseries4, Colonylwlarge - Arnold Gallardo

Of course, Lightscape 3.2 for its wonderful radiosity/raytracer engine. So far there is no commercial progressive-refinement software that approaches what LVS can do in terms of getting subtle indirect illumination and color bleeding along with the ability to handle large scenes and have total control over most of the lighting/luminaire and radiosity parameters.

Newsacristy2, LADlvsproject - Arnold Gallardo

trueSpace 5.1 is another application that I use. Some people think it's just a 'pretty GUI' mid-range 3D application incapable of doing serious renderings, but its Lightworks render engine along with the shaders can generate fast, realistic renderings. Its 'polygon draw' tool works well for me since it acts like a manual polygon bisector where you can literally draw 'stencils' on polygon faces. Third party shaders such as those by Primitive Itch (Shaderlab) and Urban Velkavrh (Shady) expanded the possibilities of tS 5.1, since they offer procedural shaders along with render engine related enhancements that are not in the tS box package.

Dutch_Interior - Arnold Gallardo

3D Studio Max r3 also has been used along with 3D Studio Viz. The great thing about Max/Viz is that one can solve a problem in numerous ways with many options and possibilities. The comprehensive material properties editor in Max/Viz helps a lot when dealing with simulating complex surfaces. Its hybrid polygon/NURBS toolset also helps a lot when there is a need for parametric forms that can only be done with NURBS. The light 'multiplier' in Max/Viz is wonderful since the intensity is easily changed in predictable steps, and if you combine this with the 'Attenuation Parameters' you have the makings of a total CG lighting control parameter, which is the way lighting should be. Max's ability to use other render engines inside it makes it easier to use Renderman compliant renderers like BMRT/PRMan via Animal Logic's Maxman, as well as Blur Studio's/Splutterfish Brazil (aka RayFx/Ghost). The different GUI modes in Viz also make scene creation quicker, along with the built-in Camera Tracking, which is very useful.

I also have started using Merlin 3D. The wonderful thing in M3D is its real-time interactive lighting, which is so close to the rendered image that you need few test renders, if any at all. So your lighting workflow is faster, since its lighting setup is WYSIWYG with texture. Merlin 3D also can handle large scene datasets and still have scene interactivity without an obvious slowdown. Its 'clean GUI' is task driven and workflow oriented, which is good when you are doing something quick.

Darktree/Simbiont is another application that I have recently used. The wonderful and realistic standard procedural shaders that come with the package have helped a lot, since there is no more need to store and keep track of numerous texture maps, and you avoid the problem of UV map alignment and the flat reflection that a texture map generates even with Blinn or another complex shader type. Darktree users, along with the Darksim principal people, are also generous in sharing their own darktree shaders, so the pool of available procedural textures is always expanding. A major benefit of using darktree shaders if you are using multiple 3D applications is that they are cross compatible, so a concrete darktree texture in LW is very similar to the one rendered in Max/Viz. And lastly, there is no more worry about getting 'pixelated' renderings when the camera gets too close to a polygon face with a texture map.

Deep Paint 3D is an effects/3D painting program that I also use. For situations where procedurals are neither wanted nor applicable, nothing beats the toolset in DP3D. The ease of painting directly on the 3D mesh avoids 'unwrapping' the mesh and then fooling around with the UV map to align it well later on. The many effects and brushes available mean that it is possible to create the subtleties of a procedural shader look and pattern in a 2D texture. DP3D also works seamlessly with Photoshop, Max and now LW.

Polytrans is another application that has become indispensable because of its ability to import, export and convert 3D file formats along with its ability to deal with animation information. Without it, it would be difficult to work with several 3D data sources and move between them.

CGA: What do you not like to see in computer generated architectural work?

The main problem I have with most CG architectural work is the lack of a full tonal range that shows the 'textured highlights' and the 'detailed shadows'. It is really something that I look for. Some of the images I have seen mostly have 'gray toned' shadows, if not that 'pale look', on a well calibrated monitor, which reduces the impact that the image has. Also, if there is a mismatch between the background plate and the CG element, that just destroys the image. Lastly, I personally am not fond of renderings that use texture map 'clipart', which creates a kind of 'assembly-line-3D-artwork-look' where anyone's images start to look like everyone else's. Clipart texture maps have their place, but the way they are being applied must be evaluated against the 'impression' and impact that they have on the final image. Actual swatch scans or photographs along with custom-made texture maps or procedural shaders are the way to go for most purposes.

CGA: What tip(s) can you give our readers to improve their architectural renderings?

There are only a few things that one needs to follow, and these are in accordance with the workflow in doing an Architectural CG scene: optimize your modeling, use the proper texture/procedural shader and spend more time in the lighting phase.

Optimized modeling means that one has to think about the final destination and use of what one is modeling. This means that if you know the final image is destined for a web streaming media format, you will be able to get away with less detail and maybe more texture maps to simulate architectural details, and do not forget about using alpha channels/clipmaps. If you know that the end use will be in print or press, then you will need to work more. Optimizing for radiosity by explicitly indicating occlusions and boundaries also helps a lot.

The texture map/procedural phase is the next critical thing one should consider. Doing a bit of research, like browsing through a local home improvement store to actually see the sample materials or requesting swatches of what will actually be used, helps a lot, since you can hold and play with the material and see how it interacts with light, so you can approximate its behavior and 'look' in CG. One should not be using arbitrary texture maps just because they are the only ones available; if you need to make one in an image editing program like Photoshop, do it, or scan several of the actual materials yourself if possible. Then during the CG phase, use a varied set of the same material so that even if it's all rosewood, let's say, it will not have that uniform repeating look all over your scene.

Lastly, spend 1/3 or more of your allotted time on lighting setups and evaluation. Most of the time this is the area where people spend the least time on an architectural CG project, unless there is a heavy reliance on GI/radiosity. You need to have a 'feel' for the way the light models and 'bathes' the environment and to evaluate the psychological component there. Even if the purpose is lighting analysis, ultimately lighting in an architectural setting will affect moods because of the lighting intensity and color temperature. All of that can be conveyed well in the final image if one plans ahead. Remember that the best modeling in the world will look mediocre under bad lighting, but mediocre modeling can be made to look good by great lighting. Finally, the more you can avoid the cold, sterile and desolate 'look' of most CG renderings, the better. However, this also depends on the building's utility and purpose as well as the architect's intention.

CGA: What is your favorite link to visit on the web? (not necessarily CG related)

I am a news junkie so I visit mostly CNN and The Register (IT news). But the people that know me, they know that I have an enormous favorite folder (~100 megs so far) that functions as my own search engine..:) so there are many links that I visit. When you do research you tend to accumulate URLs quickly.

CGA: Which web-based resources have you found the most informative?

Technical:

http://www.graphics.cornell.edu/online/research/

http://www.nzdl.org/cgi-bin/cstrlibrary?a=p&p=about

http://www.siggraph.org/education/

http://www.cs.utah.edu/~bes/graphics/gits/

http://ls7-www.informatik.uni-dortmund.de/~kohnhors/radiosity.html

http://www.cs.cmu.edu/afs/cs/project/classes-ph/860.96/pub/www/montecarlo.mail

http://www.graphics.cornell.edu/~phil/GI/

Lighting:

http://www.hike.te.chiba-u.ac.jp/ikeda/CIE/home.html

http://hovel:35108@lighting.lrc.rpi.edu/index.html

Papers/Researchers:

http://graphics.stanford.edu/~hanrahan/

http://www.acm.org/pubs/tog/editors/erich/

http://www.cs.utah.edu/~shirley/

http://nis-lab.is.s.u-tokyo.ac.jp/~nis/

http://w3imagis.imag.fr/Membres/Francois.Sillion/

http://www.research.microsoft.com/users/blinn/

http://www.graphics.lcs.mit.edu/~fredo/

http://www.graphics.cornell.edu/~eric/

http://www.cs.caltech.edu/~arvo/
